Work Experience

I have been at Plus since November 2024 as a Scene Understanding Software Engineer on the perception team in Santa Clara, California. My primary responsibility is researching and implementing software solutions that enable multi-modal, multi-view, end-to-end lane-tracking models across camera, radar, and lidar sensing setups. This often involves deep dives into novel algorithms that tackle the long tail of real-world problems in challenging and diverse scenarios, with the goal of safely enabling L4 autonomous driving.

Sensor-agnostic lane perception built on deep learning scales across diverse driving scenarios and sensor configurations, which supports Plus’s driverless systems being tested around the world.

Before joining Plus, I was an Object Tracking Developer at Aptiv in Troy, Michigan. My technical contributions there involved building software that supports downstream threat-assessment algorithms and ensures dependable multi-modal sensor fusion.

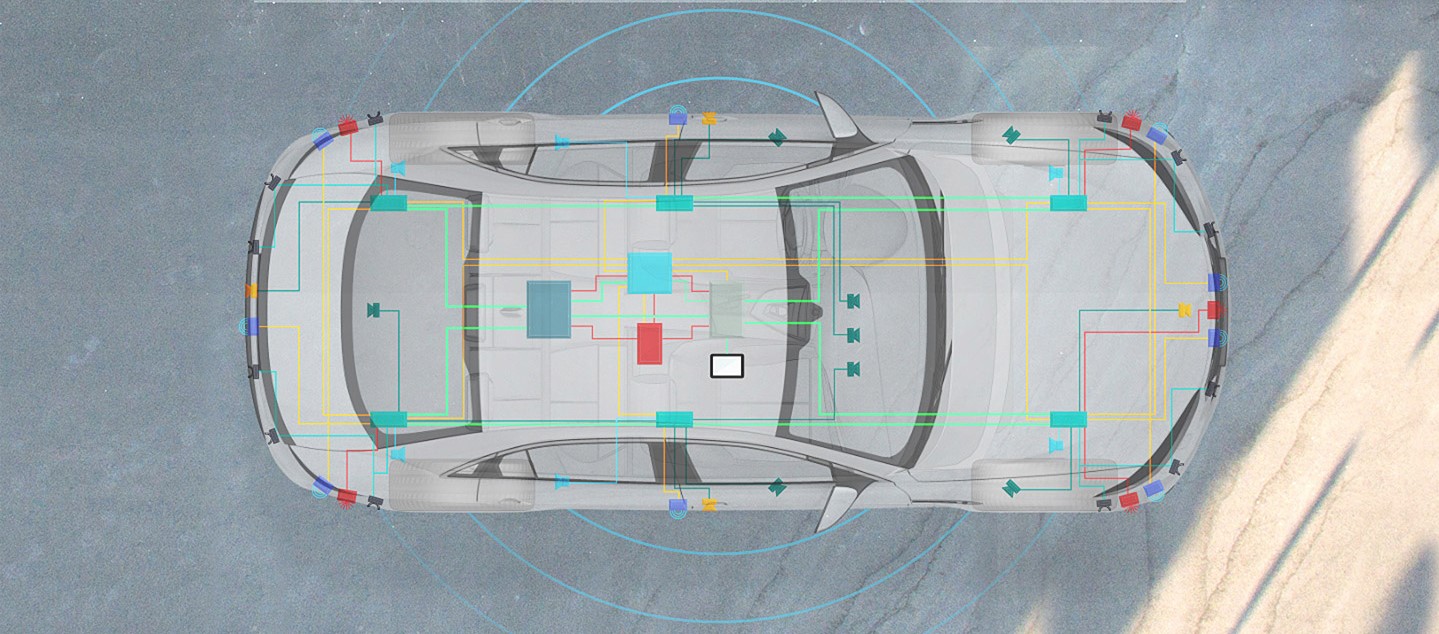

Sensor fusion is the ability to combine inputs from several radars, lidars, and cameras into a unified model or image of the environment surrounding a vehicle. The resulting model is more accurate than any single sensor's view because it draws on the individual strengths of each sensor modality. Vehicle systems use the insights derived from sensor fusion to drive intelligent automated or semi-automated actions. In the same vein, my role entailed designing and implementing multi-target tracking algorithms that take inputs from multiple cameras and radars. Broadly speaking, the output of object tracking gives the host vehicle comprehensive environmental awareness, including the states and classes of neighboring vehicles, pedestrians, and barriers.

The tracking output is then used to support intelligent active driver assistance systems such as adaptive cruise control, automatic lane change, and pre-collision warning and braking. These features aim to reduce driver involvement and pave the way toward greater autonomy.
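
As a toy illustration of the sensor-fusion idea described above, the sketch below fuses two independent range measurements of the same target by inverse-variance weighting. It is a minimal textbook example and not the method used in any production system I have worked on; the `Measurement` class, the `fuse` function, and the radar and camera numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    value: float     # measured range to the target, in metres (hypothetical)
    variance: float  # sensor noise variance, in metres squared (hypothetical)


def fuse(measurements):
    """Fuse independent scalar measurements by inverse-variance weighting."""
    if not measurements:
        raise ValueError("need at least one measurement")
    # A sensor with lower noise variance gets a proportionally larger weight.
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    # The fused variance is never larger than the best single-sensor variance.
    return Measurement(value=value, variance=1.0 / total)


# Assumed numbers: radar is precise in range, the camera estimate is noisier.
radar = Measurement(value=50.0, variance=0.25)
camera = Measurement(value=52.0, variance=4.0)
fused = fuse([radar, camera])
print(f"fused range: {fused.value:.2f} m, variance: {fused.variance:.3f} m^2")
```

The fused estimate lands close to the radar reading because radar is the more precise sensor in this made-up setup, while its variance ends up lower than either input, which is the sense in which fusion leverages the strengths of each modality.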